Flow inside and out

This year marked the completion and publication of Flow Engineering, co-authored with Andrew Davis, after many years of practice, research, and collaboration. Flow Engineering is now practiced in all types of companies from Starbucks to Nykredit to Toyota.

Shortly after the launch of Flow Engineering, The Lean-Agile Way was released, co-authored with Cecil ‘Gary’ Rupp, Richard Knaster, and Al Shalloway. The book dives deep into Lean-Agile principles and value stream management to drive tangible business outcomes - something enterprises still struggle to integrate as we enter 2025. It was a chance to build upon the structure and dynamics of value stream networks we touch on in Flow Engineering.

Flow Engineering has scaled up and out, extending to full reorganization in a few of our engagements that continue into 2025. Stay tuned for case studies and lessons learned as we move into next year. Send me a note if you’re interested in specifics.

We’re building out the future of flow, together, in person. March 3-7, we’re running a program of Intensive Flow Engineering for Leaders, with very limited spots for sale. I hope we see you there!

Data highlights from 2024

A huge step forward for AI and platform engineering, tempered by complexity

Puppet State of DevOps Report

~40,000 respondents

Focused on Platform Engineering

Platforms have expanded and proliferated

Building security and compliance in: 51% of respondents indicate that platform teams are responsible for enforcing software and tool versions for security updates, and 43% report having a dedicated security and compliance team within their platform

52% of respondents say that a product manager is crucial to the success of the platform team

65% of respondents report that the platform team is important and will receive continued investment

DORA State of DevOps Report

~39,000 respondents

The high-performance cluster shrank from 31% to 22%, while the low-performance cluster grew from 17% to 25%

For the first time, the medium-performance cluster has a lower change failure rate than the high-performance cluster

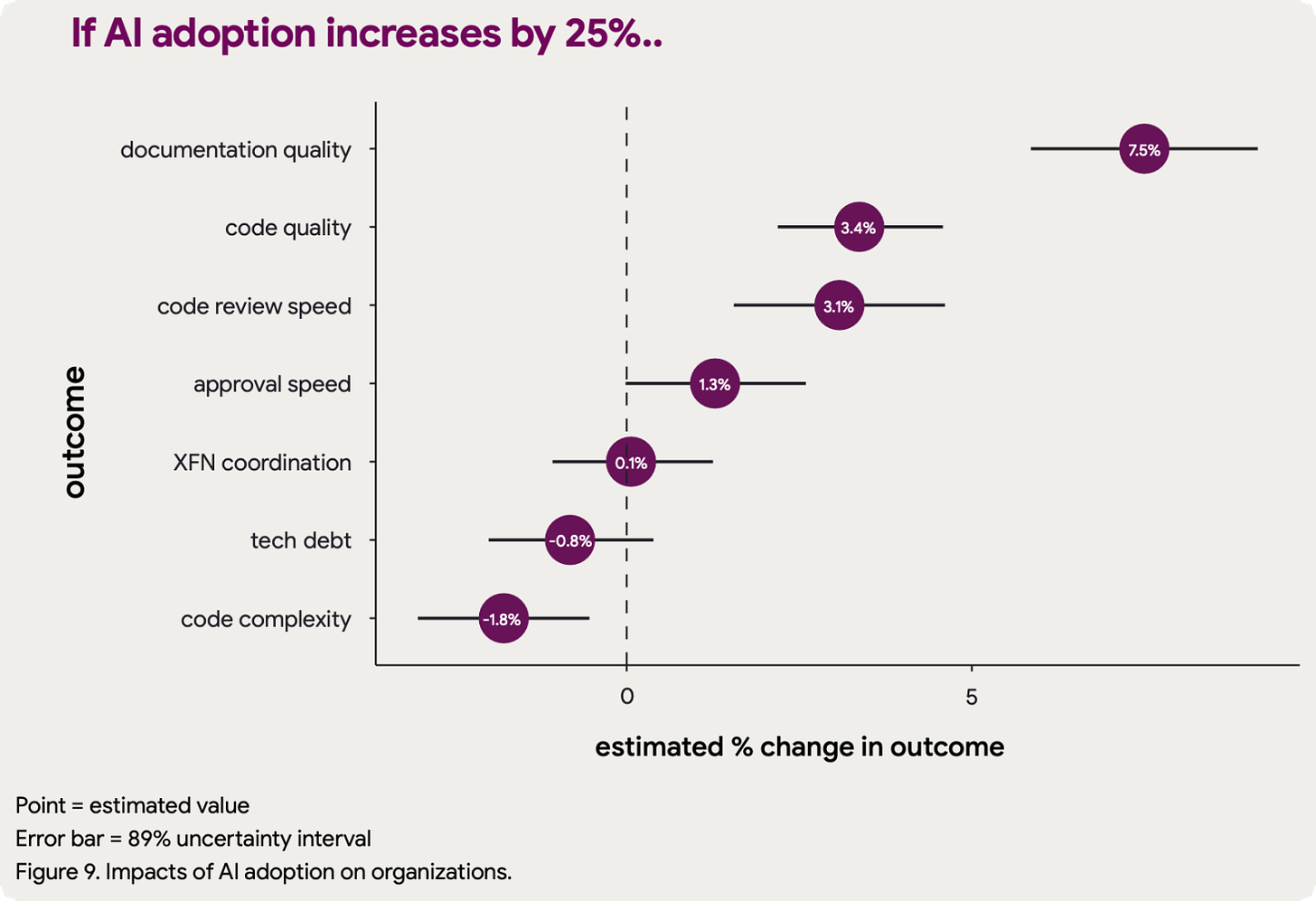

While AI enhances individual productivity and job satisfaction, it paradoxically worsens overall software delivery performance

More than 75% of respondents said that they rely on AI for at least one daily professional responsibility

39.2% of respondents indicated distrust in AI-generated code

AI adoption correlates with larger batch sizes, which introduce higher risk, but it also results in dramatically higher documentation quality

Further correlation between a positive developer experience and improved product quality, higher productivity, and reduced burnout

JetBrains Dev Ecosystem

23,262 developers worldwide

Does your company measure developer experience and developer productivity (either for individuals or teams)? Over 50% say no

How many developers use ChatGPT or Copilot while programming? 69%

How much of your working time do you spend on activities directly involving code? 19% say 71-80%

How much of your working time do you spend on meetings, work-related chats, and emails? 33% say 10%–20%

These final two dimensions seem surprisingly low, but we rarely get a chance to see into developer calendars. I keep waiting for a survey based on anonymized calendar and IDE data…

State of Project to Product

600 respondents

Only 3% of survey respondents said nothing stands in the way of their transformation

More than 50% of organizations are just starting or experimenting with a product operating model

Just 10% said they have a structured approach to managing dependencies across teams and value streams

Two-thirds can’t explain how their work results in positive business outcomes, or have few leaders who can articulate the business drivers

Just 15% said all products have automated, independent paths to production

DX Core 4

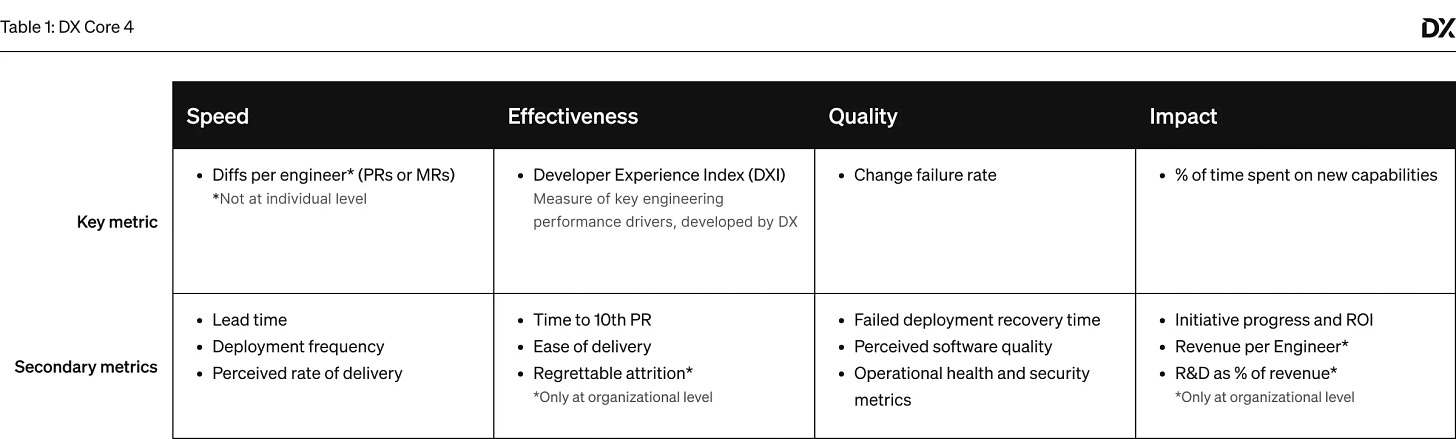

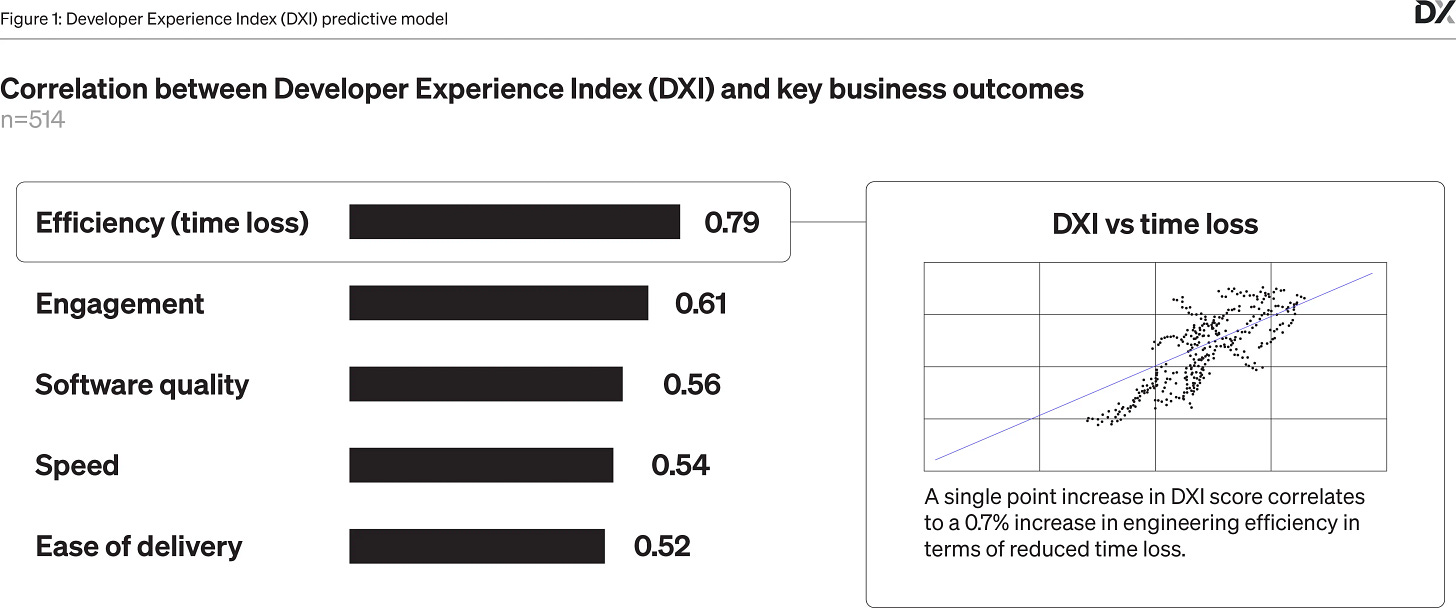

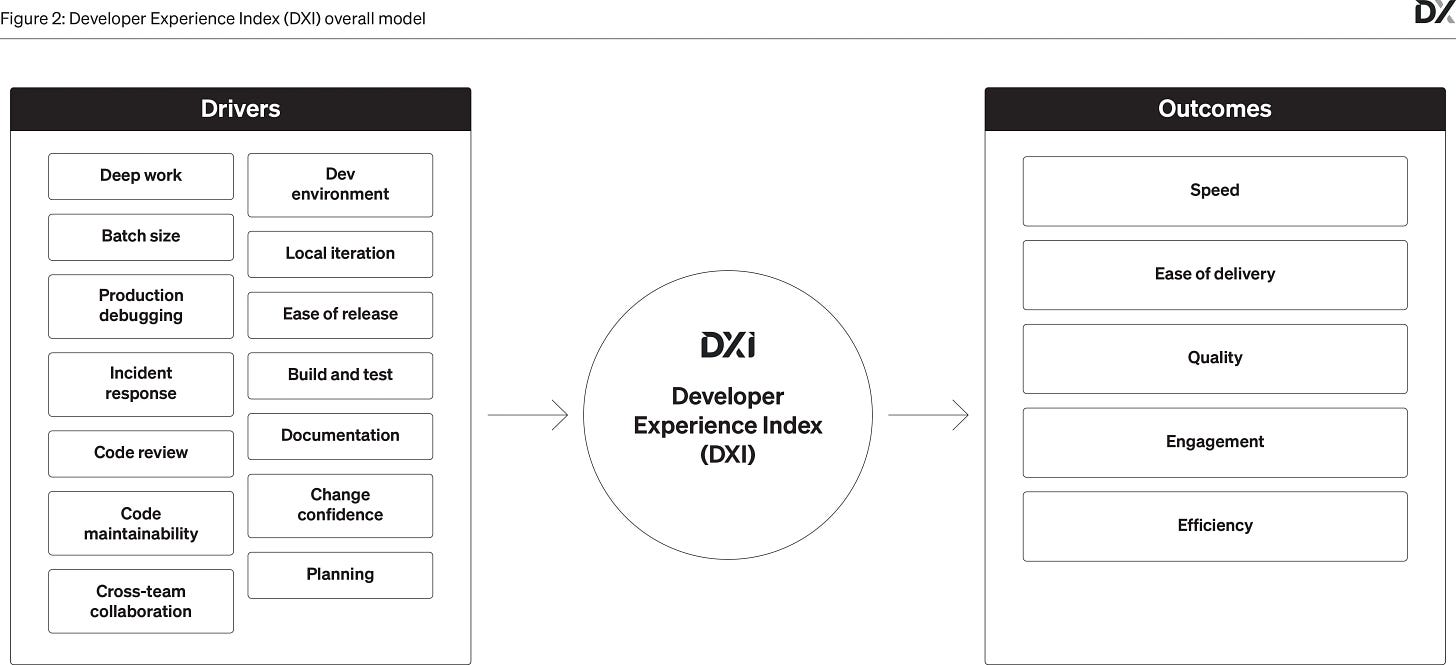

A new entrant into the developer performance measurement landscape, built on four key dimensions: speed, effectiveness, quality, and business impact. It borrows from DORA, SPACE, and DevEx research across 300 tech, finance, retail, and pharmaceutical companies.

Connected to:

3%-12% overall increase in engineering efficiency

14% increase in R&D time spent on feature development

15% improvement in employee engagement scores

It’s progress, but not without caveats:

Limited connection made to business outcomes (though it is early, engineering is only a subset of value delivery, and the full picture should always be considered) - the metrics are leading indicators, unlike DORA’s lagging ones

Lead time and deployment frequency made secondary to “Diffs per Engineer”

The introduction of the Developer Experience Index (DXI) as a complicated amalgamation of multiple drivers, much like DORA’s key capabilities - and just as likely to be ignored in favor of the lagging indicators

What can you take from all this?

Every outcome we pursue is subject to capabilities and practices. It is the result of workflow: the investment of time in key areas of focus, and the balance between them.

All the metrics and all the data are downstream from effective action.

An investment in Flow Engineering is the best way to focus on your specific target outcomes, rather than generics, and your unique workflow, rather than abstract metrics.

The best time to start is now, before your plan for the year is set and the status quo has taken hold again.